We've long recognized that the exponential growth in data access—particularly the massive, complex, diverse datasets that comprise ‘Big Data’—necessitates increasingly sophisticated tools for storage, management, and analysis. This demand has driven the rise of data centres that enable vast storage capabilities and high-speed processing. Simultaneously, advancements in IT hardware (including processor chips) and software (such as ‘AI engines’) continue to push the boundaries of speed and capacity, supporting this data surge.

Nobel laureate Geoffrey Hinton has noted, "AI refers to the ability of computers to perform cognitive tasks like generating text or creating recommendations." In many ways, Big Data and AI share a symbiotic relationship:

AI requires large datasets to be trained effectively.

Big Data becomes more manageable and insightful with the help of AI.

July 2023’s MBMG Outlook (https://www.researchgate.net/publication/392361438_AI_AI_Oh_An_analysis_of_actual_and_potential_disruptions_in_decision_making_policy_and_capital_markets_due_to_the_hyperbolic_narrative_surrounding_Artificial_Intelligence) focused on the background to this data-driven sensory assault:

“Algebra has been described as the study and manipulation of variables in various fields of mathematics, ultimately using equations (and inequations) as building blocks. The term was coined by Al-Khwarizmi, a 9th century Persian author, from the Arabic al-jabr, meaning ‘reunion’ and used in practice at the time to refer to resetting broken bones. Al-Khwarizmi also coined the terms algorithm and algorism, which evolved into English through Latin usage to represent respectively skilled accounting and arithmetical skill using cyphers (kind of algebraic bookkeeping almost).

The word algorithm also derives from algorism, a generalization of the meaning to any set of rules specifying a computational procedure. This concept of algorithms as a set of rules that specify a computational procedure dates back to at least Euclid, in the 3rd century BC….

Algorithms are the building blocks or ‘backbone’ of computer programming and computer science….

Human fears of technology have developed from seeing automation as a threat to their livelihoods, as expressed by the destruction of machinery by Luddites during the Napoleonic wars (although the practice had been around for over 150 years at that stage).

By the middle of the 20th century, Isaac Asimov developed his 3 rules of robotics to reassure humanity that the fears expressed in the likes of Fritz Lang's film (and Thea von Harbou's source novel) ‘Metropolis’ were unfounded.”

We also noted how, in contemporary discourse, ‘The rise of the machines’ has fuelled headlines promising revolutionary economic, social, and technological shifts. However, these narratives often overstate the immediate impact. AI is an intriguing continuation of algorithmic development: not an overnight revolution but a long-term evolutionary process. While AI can cause significant changes over decades, rapid, disruptive ‘sea changes’ are unlikely to be imminent:

“We understand that technologies have generally emerged and evolved in ways that make terms like ‘industrial revolution’ or ‘technological revolution’ less accurate than if the term ‘evolution’ had been used instead. The effects can be dramatic but can take place over many decades.

Beware of brokers bearing compelling AI stories and understand that the relationship that has driven AI, tech and the NASDAQ so relatively high is mutually reinforcing but we don’t seem to be at the stage yet where there exists an AI bubble fit to burst that will take tech, the NASDAQ and blue chips down with it. It seems more likely to us that all of these would be victims of the same headwinds that will infect and weaken all at the same time, rather than the contagion effects that we witnessed in the Y2K crash.”

We also noted that:

AI's true strength lies in identifying patterns within vast, complex datasets, often in areas where human judgment is thought to be necessary but where equations, inequations, algorithms and formulae can do the work. This is especially true in areas where fuzzy logic and decision-tree approaches help AI output specific results based on probabilistic assessments.

The Human Data Deluge

The volume of data that we process each day has skyrocketed. In the 16th century, educated individuals processed roughly 74 GB of information in a lifetime. By 1986, that same amount was equivalent to a week’s worth of data for an average American. Fast forward to today, and an individual might process around 200 GB each day[1], much of it hidden within the flood of subliminal impressions absorbed mostly through heuristics and cognitive shortcuts. The sheer volume is staggering, especially as our brains are estimated to encounter over 250,000 times more data than we actively process. Clearly, we humans need help!
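The orders of magnitude quoted above can be made concrete with a little arithmetic. The following is a minimal sketch that uses only the three figures given in the text (74 GB, one week, 200 GB per day); the variable names are illustrative, and the underlying estimates are taken at face value rather than verified.

```python
# Figures as quoted in the text; taken at face value, not independently verified.
LIFETIME_16TH_CENTURY_GB = 74   # one educated 16th-century lifetime
DAYS_PER_WEEK = 7               # 1986: the same 74 GB arrives in one week
TODAY_GB_PER_DAY = 200          # present-day estimate (footnote [1])

# Implied daily intake in 1986.
gb_per_day_1986 = LIFETIME_16TH_CENTURY_GB / DAYS_PER_WEEK

# How many times larger today's daily figure is than the 1986 one.
growth_since_1986 = TODAY_GB_PER_DAY / gb_per_day_1986

# Hours needed today to match a whole 16th-century lifetime of information.
hours_to_match_lifetime = LIFETIME_16TH_CENTURY_GB / TODAY_GB_PER_DAY * 24

print(f"1986 intake: {gb_per_day_1986:.1f} GB per day")
print(f"Growth since 1986: about {growth_since_1986:.0f}x")
print(f"Hours today to match a 16th-century lifetime: {hours_to_match_lifetime:.1f}")
```

On these figures, what once took a lifetime to absorb now arrives in under nine hours.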

The Role and Limitations of Advanced AI

One prevalent form of AI, and especially of so-called ‘Generative AI’, is the Large Language Model (LLM), which processes and generates human-like language based on vast datasets of text. Advocates claim LLMs “understand” language and generate new text; in reality, they recognize patterns, rebuild existing knowledge, and produce plausible outputs without genuine comprehension. For instance, AI can compile seemingly impressive, complex essays on the unification of quantum mechanics and general relativity (which Google’s AI engine states is “one of the most complex and challenging problems in theoretical physics”), but it lacks true insight or original thought and it won’t, on any meaningful level, understand what it’s done.

AI’s Mathematical Limitations

AI often struggles with mathematics, primarily because it relies on pattern recognition rather than understanding. Web developer Vishan Jajra explains[2]:

Lack of reasoning: AI doesn’t genuinely understand mathematical concepts; it only detects patterns.

Multi-step logic: AI can falter on complex, multi-stage deductions, often skipping steps, assuming false patterns or getting confused in long chains.

Abstract problem-solving: It’s limited in areas such as higher algebra, number theory, geometry and calculus, which require abstract thinking.

Limited training data for novel problems: It excels at known questions where it recognises existing patterns but falters with new ones.

Professor Steve Keen recently explained to me: "AI may give the right answer to a common mathematical puzzle but if you ask how it arrived at that answer, it won’t be able to explain its reasoning and will often produce utter nonsense as its supposed method when asked to justify."

Essentially, AI just copies all the maths homework of all the generations that have gone before.[3]

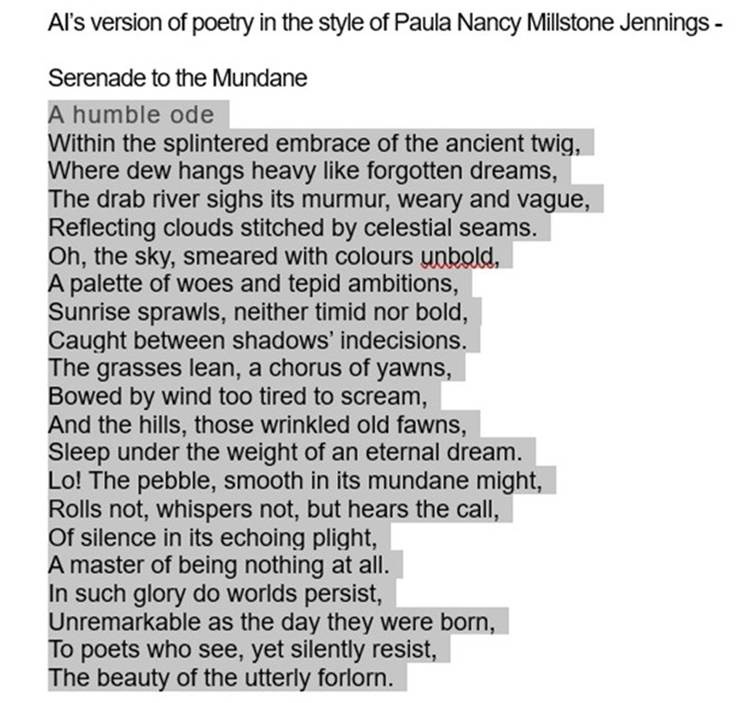

AI Poets Society

A recent NYT headline claimed that “A.I. Can Write Poetry, but It Struggles With Math”.[4]

To test this out, we assumed that if AI can write poetry, it can write bad poetry as well as good poetry. The fictional character Paula Nancy Millstone Jennings was, according to The Hitchhiker’s Guide to the Galaxy, the poet who wrote the worst poetry in the universe, of which only the following survives:

The dead swans lay in the stagnant pool.

They lay. They rotted. They turned

Around occasionally.

Bits of flesh dropped off them from

Time to time.

And sank into the pool's mire.

They also smelt a great deal.

Tasked with writing poetry in the style of Ms. Jennings, AI disappointed in my opinion:

Clearly AI, for better or worse, doesn’t understand the essence of terrible poetry. We also suspect that it doesn’t understand the essence of exceptional poetry. In fact, we suspect that AI just doesn’t understand.

The Concerns and Realities

It’s worth noting that the synonyms given by the Oxford English Thesaurus for the word ‘artificial’ primarily comprise the following:

Feigned, insincere, false, affected, mannered, unnatural, stilted, contrived, pretended, put-on, exaggerated, actorly, overdone, overripe.

We prefer ‘Feigned Intelligence’ as the most suitable descriptor.

While AI can process enormous amounts of text and generate superficially impressive outputs, anyone worried about AI taking over should remember that:

Much of what is called “AI” comprises sophisticated algorithms created by homo supposedly sapiens.

LLMs are one of the most prevalent forms of AI and while they can superficially process, replicate and reproduce huge databases of text, it is only superficial because they have zero understanding.

The quality of data, often riddled with misinformation and biases, affects AI output (GIGO: Garbage In, Garbage Out). The volume of data created appears to be inversely correlated with the quality of that data.[5] The “democratizing” promise of online data is undermined by the proliferation of misinformation, which AI can inadvertently amplify. With little in the way of quality control, the purported democratising effect of the online world rests on the idea of mass participation, but this can lead instead to the repetition of ‘tropes’ that actually reinforce dangerous misconceptions, “based on stoking up people's fears and prejudices and fake news and deception to manipulate.”[6] The more that prejudices dominate internet content, the more they dominate the ‘Garbage’ fed into AI and the more they dominate the ‘Garbage’ produced by AI, in a self-reinforcing vicious circle of misinformation.

AI systems reflect their initial programming and data sources, which can embed biases or particular worldviews, often influenced by high-profile figures and corporate leaders. Filtering applied by AI (just as human brains filter data to make it manageable) is ultimately a reflection of the initial programming parameters. Control derives from the individuals exerting control over the AI engine’s parameters. As a simplified example, Grok’s programming ultimately reflects views that derive from sources acceptable to Elon Musk. When Musk uses his various channels to promote Grok, he is promoting an AI engine whose output is directed to support Musk’s world view.

Conclusion

AI LLM engines are effectively giant search engines, but with non-transparent and hidden agendas.

Think about what useful tools search engines are: how would you be able to use the internet without them?

But also think about the stupid results that search engines sometimes throw out. They don't understand the difference between the results that we're actually looking for and the ludicrous. They don’t understand the difference between mediocre poetry and Paula Nancy Millstone Jennings. But they can be programmed to make us all lap up the latest misinformation, disinformation and propaganda promoted by Elon Musk or Mark Zuckerberg or Bill Gates or various governments.

Be wary of AI but not necessarily for the widely touted red herrings about AI turning ‘Terminator’ –

Full Bibliography Available Upon Request

MBMG Investment Advisory is licensed by the Securities and Exchange Commission of Thailand as an Investment Advisor under licence number Dor 06-0055-21.

For more information and to speak with our advisors, please contact us at info@mbmg-investment.com

About the Author:

Paul Gambles is licensed by the SEC as both a Securities Fundamental Investment Analyst and an Investment Planner.

Disclaimers:

1. While every effort has been made to ensure that the information contained herein is correct, MBMG Investment Advisory cannot be held responsible for any errors that may occur. The views of the contributors may not necessarily reflect the house view of MBMG Investment Advisory. Views and opinions expressed herein may change with market conditions and should not be used in isolation.

2. Please ensure you understand the nature of the products, return conditions and risks before making any investment decision.

3. An investment is not a deposit, it carries investment risk. Investors are encouraged to make an investment only when investing in such an asset corresponds with their own objectives and only after they have acknowle

Appendix - It’s an UnfAIr Cop(y)

I gave AI the chance to respond to my comments about AI. This is what it came up with:

Survey Note: Detailed Analysis of Paul Gambles' Comments on Artificial Intelligence

Paul Gambles, a prominent economic commentator and managing partner at MBMG Group, has made several notable statements regarding artificial intelligence (AI), particularly focusing on its implications for financial markets. This survey note provides a comprehensive overview of his views, derived from various public sources, including interviews, articles, and his personal Substack. The analysis aims to capture the nuances of his commentary, the context in which it was made, and its relevance to broader economic discussions as of June 3, 2025.

Background on Paul Gambles

Paul Gambles is a well-known figure in economic commentary, serving as the founding partner of MBMG Group, a financial services firm based in Bangkok. With extensive experience in asset allocation, tax structuring, and macro-economic analysis, he is a regular contributor to media outlets such as CNBC and Bloomberg. His role involves providing insights into market trends, investment strategies, and economic policies, often with a focus on emerging technologies and their financial implications.

Specific Comments on AI

Gambles' comments on AI have primarily centered on the valuation of AI-related stocks and their potential to create market instability. The following table summarizes his key statements, sourced from credible platforms:

| Date | Platform | Comment |
| --- | --- | --- |
| 8/2/2024 | CNBC Video | "We are deeply into bubble territory when it comes to AI." |
| 11/9/2024 | Substack Note | "AI stocks have partied exactly like it’s 1999….. AI stocks will exacerbate and may even trigger any crash…," in response to Vanguard's caution on AI expectations. |

These statements reflect a consistent theme: Gambles views the AI sector, particularly its stock market performance, as being in a speculative bubble, reminiscent of the dot-com bubble in the late 1990s. His Substack note, in particular, draws a historical analogy, noting that while the internet transformed the world, it was not due to overhyped companies like "dogfood dot com," but rather the underlying technology's eventual integration. He separates the "ultimate benefits (and drawbacks) of increased use of algorithmic processing and machine learning" from the current stock price bubble, suggesting a disconnect between market valuations and real economic value.

Context of Comments

Gambles' remarks were made in the context of broader market discussions, particularly during earnings seasons and amid growing investor interest in AI technologies. The CNBC interview on February 8, 2024, was part of a discussion on market outlooks, where he highlighted concerns about overvaluation in tech sectors, including AI.

His Substack note, dated September 11, 2024, was a reaction to Vanguard's statement urging investors to temper expectations on AI, indicating his engagement with industry analyses and his willingness to critique market sentiment.

The timing of these comments is significant, as 2024 saw heightened speculation in AI stocks, driven by advancements in generative AI and large language models. Gambles' perspective aligns with a cautious approach, warning of potential market corrections similar to those seen during the dot-com crash, where many tech companies saw dramatic declines after initial hype.

Analysis of Economic Impact Focus

While Gambles' comments are primarily financial in nature, focusing on stock market risks, they indirectly touch on AI's economic impact. His concern about a potential crash suggests implications for investor confidence, capital allocation, and possibly broader economic stability if AI stocks, a significant market segment, were to collapse. However, his statements do not delve deeply into AI's potential effects on productivity, employment, or inequality, which are common topics in economic analyses of AI.

For instance, other research, such as reports from the Congressional Budget Office, discusses AI's potential to transform labor markets and income distribution, but Gambles' commentary remains more market-oriented. This focus may reflect his role as an investment advisor, prioritizing client portfolio risks over macroeconomic policy implications.

Search for Broader Economic Comments

Efforts to find additional comments from Gambles on AI's broader economic impact, such as through web searches for "Paul Gambles MBMG AI economic impact," did not yield specific results beyond his financial market concerns. Articles from MBMG's website, ResearchGate, and other platforms primarily highlight his expertise in asset allocation and macro-economic analysis, but lack direct references to AI's economic effects beyond stock valuations. This suggests that his public commentary on AI is largely confined to financial market dynamics as of June 3, 2025.

Comparison with Other Sources

To contextualize Gambles' views, it is useful to compare them with broader economic literature. For example, a 2024 article from The Economist notes that AI has had "almost no economic impact" so far, which contrasts with Gambles' focus on market speculation rather than real economic transformation. This discrepancy highlights a gap between market perceptions and actual economic outcomes, a theme Gambles seems to address by warning against overvaluation.

Similarly, academic papers, such as those in the Journal of Economic Perspectives, discuss AI's potential to boost productivity while posing risks to labor markets. Gambles' comments do not contradict these findings but rather focus on a narrower aspect—the financial market bubble—which could precede or exacerbate economic disruptions if unchecked.

Conclusion

Paul Gambles' comments on AI, as of June 3, 2025, are primarily centered on the financial risks posed by overvalued AI stocks, with a clear warning of a potential market bubble akin to the dot-com crash. His statements, made through CNBC and his Substack, emphasize the speculative nature of AI investments and their potential to trigger market instability. While these remarks indirectly touch on economic impacts through market dynamics, they do not extensively cover AI's broader effects on productivity, employment, or inequality. This focus reflects his role as an investment commentator, offering cautionary advice to investors amid the hype surrounding AI technologies.

Key Citations

CNBC Video: We are deeply into bubble territory when it comes to AI, MBMG Group's Paul Gambles says

Artificial Intelligence and Its Potential Effects on the Economy and the Federal Budget

Economic Policy for Artificial Intelligence: Innovation Policy and the Economy: Vol 19

[1] Updated estimates suggest that the average individual may now be processing around 200 GB of data every day

[2] “Why AI Struggles with Math” - Jajra

[3] As maths teachers tend to give as many if not more marks for ‘working out’ as for the right answer, middle school maths students probably don’t need to worry just yet about bots coming top of their class.

[4] https://www.nytimes.com/2024/07/23/technology/ai-chatbots-chatgpt-math.html

[5] According to Fabio Duarte “An almost incomprehensible amount of data is created every day. And each year, figures are growing at an ever-increasing rate…..

· Approximately 402.74 million terabytes of data are created each day

· Around 147 zettabytes of data will be generated this year

· 181 zettabytes of data will be generated in 2025

· Videos account for over half of internet data traffic

· The US has over 2,700 data centers [sic]” - https://explodingtopics.com/blog/data-generated-per-day (we noted with interest that, when we visited, the banner ad for this URL posed the question “Want to Spy on Your Competition?”, which it answered by promising to allow respondents to “Explore competitors’ website traffic stats, discover growth points, and expand your market share.” This is a good barometer as to the nature of the competition for data insights.)
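As a rough consistency check on the figures quoted in this footnote, the daily and annual totals can be compared directly. This sketch assumes decimal units (1 zettabyte = 1 billion terabytes) and a 365-day year; both are our assumptions rather than anything stated by the source.

```python
# Figures as quoted from the source above; decimal (SI) units are assumed.
TB_PER_DAY = 402.74e6   # approx. terabytes created each day
TB_PER_ZB = 1e9         # assumption: 1 zettabyte = 10**9 terabytes
DAYS_PER_YEAR = 365     # assumption: non-leap year

# Annual total implied by the daily figure.
zb_per_year = TB_PER_DAY * DAYS_PER_YEAR / TB_PER_ZB
print(f"Implied annual total: {zb_per_year:.0f} ZB")

# Growth implied by moving from the 147 ZB figure to the 181 ZB figure for 2025.
growth_pct = (181 / 147 - 1) * 100
print(f"Implied growth to 2025: {growth_pct:.0f}%")
```

Under those assumptions the daily figure is consistent with the 147 zettabyte annual total, and the 2025 figure implies growth of a little under a quarter.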

[6] https://www.researchgate.net/publication/368666866_The_Cambridge_Analytica_Scandal_and_Its_Impact_on_Meta